tdlib-telegram-bot-api-docker

Purpose of the project

Produce a working, minimal Docker image for the Telegram Bot API server, together with an easy-to-use pipeline that generates builds from changes in the main repository maintained by the Telegram team.

This project does not modify any part of the tdlib/telegram-bot-api code.

Issues

As I do not modify any part of the server code, I am not responsible for the way it works. For problems with the server itself, open an issue on the Telegram Bot API server issue tracker.

TL;DR: My responsibility ends once the container and the binary start.

Build schedule

A build is triggered automatically once a week to produce the latest version of the Telegram Bot API server. I would run it daily, but every build takes ~2.5 hours and generates additional costs on my side.

Images are versioned in the format 1.0.x, where x is the build number.

An additional version tag is also added, for example api-5.1, where 5.1 is the version of the Telegram Bot API supported by the image.
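To pin a deployment to one of these tags rather than to latest, the image reference can be built from the tag name. This is a minimal sketch, assuming the registry path used in the Docker configuration section of this README. `image_ref` and `pull_tag` are hypothetical helper names, and api-5.1 is only the example tag from the text, so it may not match a current build.

```shell
# Registry path as used in the Docker configuration section of this README.
IMAGE="ghcr.io/lukaszraczylo/tdlib-telegram-bot-api-docker/telegram-api-server"

# image_ref TAG: print the full image reference for a tag
# (hypothetical helper, not part of the project).
image_ref() {
  printf '%s:%s\n' "$IMAGE" "$1"
}

# pull_tag TAG: pull a pinned tag instead of latest.
pull_tag() {
  docker pull "$(image_ref "$1")"
}
```

Calling `pull_tag api-5.1` would then request the build for Bot API 5.1, while a 1.0.x tag pins one specific build.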

How to use the image

Images created within this project support the following architectures: AMD64 and ARM64.

Yes, this means you can run them on regular servers as well as on Raspberry Pi 4 and above! 🥳

GitHub authentication

You may need to authenticate with GitHub (see this thread) to pull even the publicly available images. To do so, create a Personal Access Token with the read:packages scope and use it to authenticate your Docker client with the GitHub Docker Registry.

Update: After the move to GHCR.io there is no need to authenticate, and you should be able to pull images without any additional magic.

Docker configuration

docker pull ghcr.io/lukaszraczylo/tdlib-telegram-bot-api-docker/telegram-api-server:latest

docker run -p 8081:8081 -e TELEGRAM_API_ID=yourApiID -e TELEGRAM_API_HASH=yourApiHash -t ghcr.io/lukaszraczylo/tdlib-telegram-bot-api-docker/telegram-api-server
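Once the container is running, a quick way to confirm that the server answers is to call a Bot API method through it. This is a minimal sketch, assuming the port mapping from the command above and a bot token kept in a `BOT_TOKEN` variable (an assumption, not something the image defines). `api_url` and `check_server` are hypothetical helper names.

```shell
# Base URL of the local server; 8081 matches the -p 8081:8081 mapping.
API_BASE="http://localhost:8081"

# api_url TOKEN METHOD: build a Bot API request URL (/bot<token>/<method>).
api_url() {
  printf '%s/bot%s/%s\n' "$API_BASE" "$1" "$2"
}

# check_server TOKEN: call getMe through the local server.
# curl -f turns HTTP error responses into a non-zero exit status.
check_server() {
  curl -fsS "$(api_url "$1" getMe)"
}
```

Running `check_server "$BOT_TOKEN"` should return a JSON response describing the bot when the token is valid and the server is reachable.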

Thing to remember: the entrypoint is set to the server binary, so you can still modify parameters on the go, as shown below.

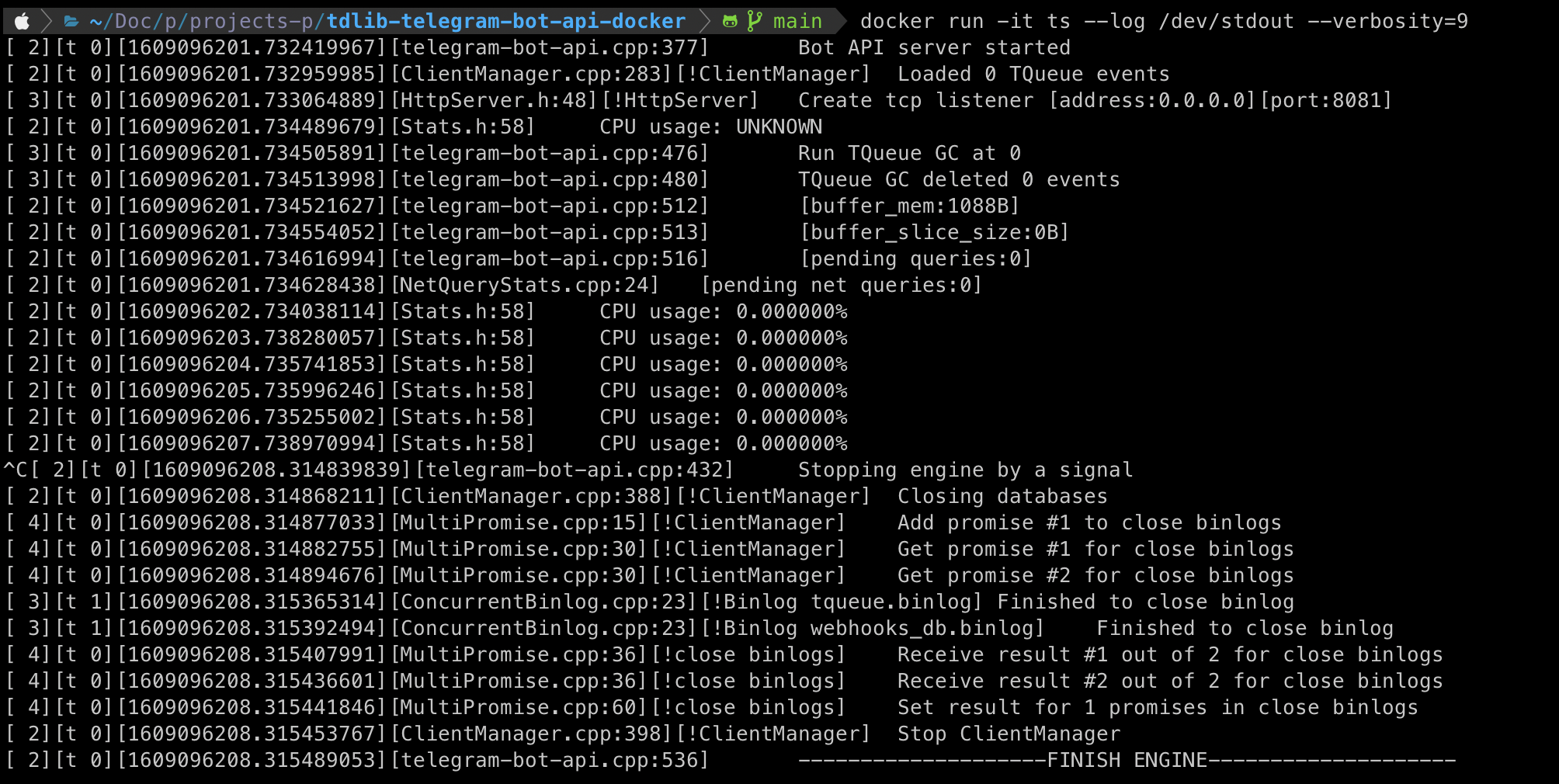

Setting the log output and verbosity

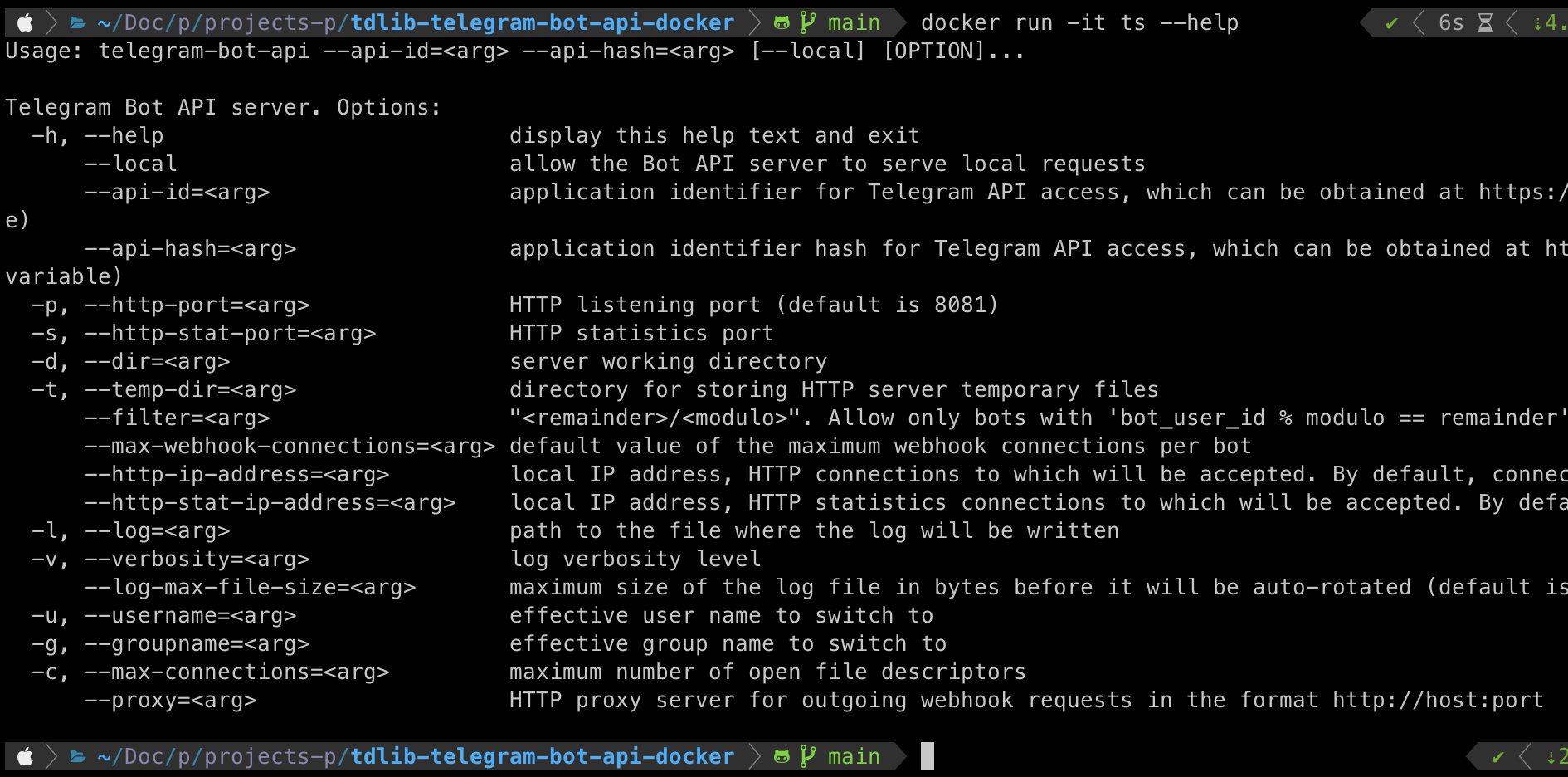

Printing out the help
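In text form, passing extra flags after the image name looks roughly like the sketch below. It assumes the upstream server's `--verbosity`, `--log` and `--help` options and expects the credentials in the caller's environment. `run_server` is a hypothetical wrapper, not something shipped with the image.

```shell
IMAGE="ghcr.io/lukaszraczylo/tdlib-telegram-bot-api-docker/telegram-api-server"

# run_server [FLAGS...]: start the container and hand FLAGS to the server
# binary, which is the image entrypoint (hypothetical wrapper).
run_server() {
  docker run -p 8081:8081 \
    -e TELEGRAM_API_ID="$TELEGRAM_API_ID" \
    -e TELEGRAM_API_HASH="$TELEGRAM_API_HASH" \
    -t "$IMAGE" "$@"
}
```

For example, `run_server --verbosity=2 --log=/tmp/bot-api.log` would set the log verbosity and log file (the level and the path inside the container are chosen only for illustration), and `run_server --help` would print the server's help.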

Kubernetes configuration

Example deployment within a Kubernetes cluster

# apiVersion: v1
# kind: PersistentVolumeClaim
# metadata:
#   name: telegram-api
# spec:
#   accessModes:
#     - ReadWriteMany
#   storageClassName: longhorn
#   resources:
#     requests:
#       storage: 5Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: telegram-api
  labels:
    app: telegram-api
spec:
  selector:
    matchLabels:
      app: telegram-api
  replicas: 2
  template:
    metadata:
      labels:
        app: telegram-api
    spec:
      containers:
        - name: bot-api
          image: ghcr.io/lukaszraczylo/tdlib-telegram-bot-api-docker/telegram-api-server:latest
          imagePullPolicy: Always
          args: [ "--local", "--max-webhook-connections", "1000" ]
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
            limits:
              cpu: 500m
              memory: 500Mi
          ports:
            - containerPort: 8081
              protocol: TCP
              name: api
          env:
            - name: TELEGRAM_API_ID
              value: "xxx"
            - name: TELEGRAM_API_HASH
              value: "yyy"
          # volumeMounts:
          #   - name: shared-storage
          #     mountPath: /data
      # volumes:
      #   - name: shared-storage
      #     persistentVolumeClaim:
      #       claimName: telegram-api
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node-role.kubernetes.io/control-plane
                    operator: DoesNotExist
                  - key: node-role.kubernetes.io/storage
                    operator: DoesNotExist
                  - key: node-role.kubernetes.io/highmem
                    operator: Exists
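Applying the manifest and waiting for the rollout can be sketched as below. The file name `telegram-api.yaml` is an assumption (the manifest can be saved under any name), and `deploy` is a hypothetical helper. Note that the required node affinity in the example only schedules pods on nodes carrying the `node-role.kubernetes.io/highmem` label, so on clusters without such nodes the pods stay Pending until that rule is adjusted or removed.

```shell
# Deployment name, taken from metadata.name in the manifest.
DEPLOYMENT="telegram-api"

# deploy FILE: apply the manifest, then block until the rollout finishes
# or the timeout expires (hypothetical helper).
deploy() {
  kubectl apply -f "$1" &&
    kubectl rollout status "deployment/$DEPLOYMENT" --timeout=120s
}
```

A call such as `deploy telegram-api.yaml` returns a non-zero status if the apply fails or the rollout does not complete within the timeout.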